YouTube Is Removing Videos With Questionable Content

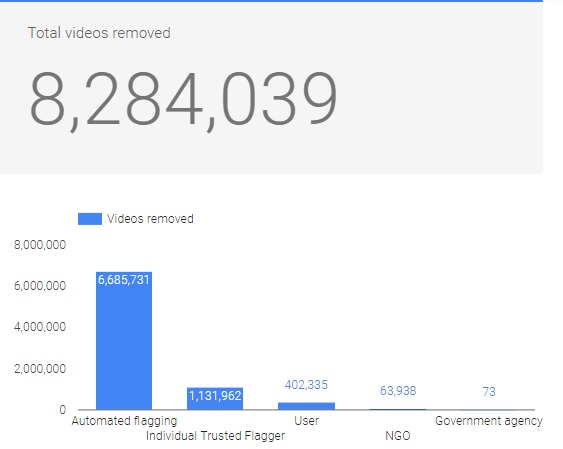

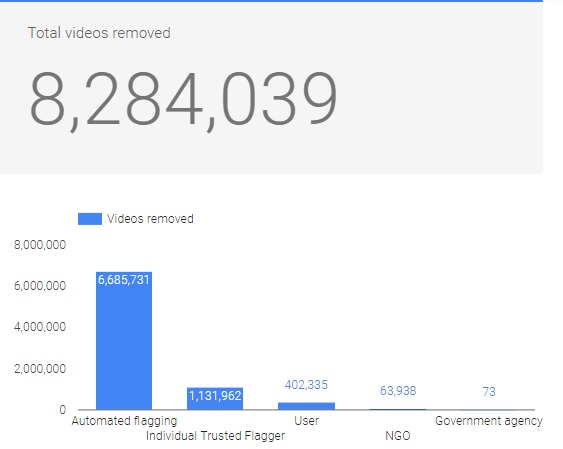

YouTube’s 2017 transparency report reveals that more than 8 million videos were removed as inappropriate between October and December 2017. To maintain the safe and vibrant community it promises its audience, the video-sharing platform has set up two ways of flagging content that violates YouTube’s policies: automated flagging and flagging by individual trusted flaggers.

Over those three months, more than 6 million of these videos were first flagged by the automated system, and more than 1 million by human flaggers.

In Q4 2017, YouTube received human flags on 9.3 million unique videos. Most of the top 10 countries from which these human flags came were in Asia, North America, South America, and the United Kingdom. Videos on the platform can be flagged for different reasons. According to the YouTube Community Guidelines, content that is not allowed on the platform, and is therefore liable to be flagged, includes pornography, incitement to violence, harassment, and hate speech.

Human flags usually come from ordinary users or from members of YouTube’s Trusted Flagger program, which includes individuals, NGOs, and government agencies that are particularly effective at notifying the platform of content that violates its Community Guidelines.

For more information, visit YouTube’s Transparency Report site.